Posters

Abstract

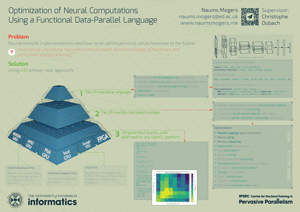

Performance-portable code is hard to produce due to diversity and heterogeneity of the state-of-the-art hardware platforms. Even more complex is the task of optimizing Artificial Neural Networks (ANNs) towards multiple hardware platforms. Manual optimization is expensive, while modern automated tools either support a narrow set of platforms or do not exploit individual strengths of different platforms to the fullest.

The functional data-parallel language Lift was shown to be performance-portable; the performance of the compiled OpenCL code is on par or better than that of highly tuned platform-specific libraries. This project aims to extend the method to the domain of Artificial Neural Networks by integrating domain-specific optimisations into the rewrite rules-based Lift compiler.

Optimizational methods of interest

- Parallel mappings space exploration

- Memory tiling

- Memory coalescing

- Approximate computations

- Float quantization

- Neuron pruning

- Training batch size autotuning

- Varying precision across layers and neurons

- Convolution kernel decomposition

- Sharing 32-bit registers

- OpenCL kernel fusion

- Expression simplification

- Proprietary instruction sets usage

Publications

- Naums Mogers, Lu Li, Valentin Radu, and Christophe Dubach: Mapping Parallelism in a Functional IR through Constraint Satisfaction: A Case Study on Convolution for Mobile GPUs ; ACM SIGPLAN 2022 International Conference on Compiler Construction

- Naums Mogers, Valentin Radu, Lu Li, Jack Turner, Michael O'Boyle, and Christophe Dubach: Automatic Generation of Specialized Direct Convolutions for Mobile GPUs ; General Purpose Processing Using GPU (GPGPU) 2020

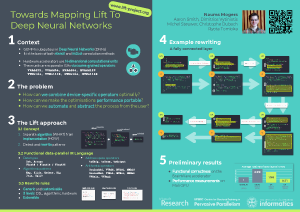

- Naums Mogers, Aaron Smith, Dimitrios Vytiniotis, Michel Steuwer, Christophe Dubach, Ryota Tomioka: Towards Mapping Lift to Deep Neural Network Accelerators ; Workshop on Emerging Deep Learning Accelerators (EDLA) 2019 @ HiPEAC